Why OpenAI Whisper Is Outperforming Big Tech in Audio Transcription

In recent years, automatic speech recognition (ASR) has become central to a wide range of applications—from video captioning and voice assistants to compliance, media localization, and customer service automation. The big players—Google, Amazon (AWS), and Microsoft—have long dominated this space with enterprise-ready ASR solutions. But OpenAI’s open-source model, Whisper, has entered the scene with a serious claim: higher accuracy, broader multilingual support, and better performance in noisy environments.

At GTS, we benchmarked Whisper against these major ASR providers in the context of video transcription and subtitle translation. The results are eye-opening—and speak directly to businesses looking for a reliable, scalable transcription engine.

Benchmarking WER: Whisper vs. Google, AWS, and Microsoft

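The most common metric for evaluating transcription performance is Word Error Rate (WER). It counts the word substitutions, deletions, and insertions needed to turn a system's transcript into a human-verified reference transcript, divided by the number of words in the reference. Lower is better.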
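Here is a minimal Python sketch of the metric. It assumes simple lowercase, whitespace-split tokens and computes WER as the word-level edit distance between reference and hypothesis: (substitutions + deletions + insertions) / reference words. Published benchmarks normally also normalize punctuation, numbers, and spelling variants before scoring, so numbers from this sketch are not directly comparable to the benchmark figures.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N, computed as word-level Levenshtein distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev holds the previous DP row: edit distances from the first i-1
    # reference words to every prefix of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match (cost 0) or substitution (cost 1)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "the quick brown fox jumps over the lazy dog"
    hyp = "the quick brown fox jumped over a lazy dog"
    print(f"WER = {word_error_rate(ref, hyp):.1%}")  # prints "WER = 22.2%"
```

Because insertions count as errors, WER can exceed 100% when a system outputs many extra words.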

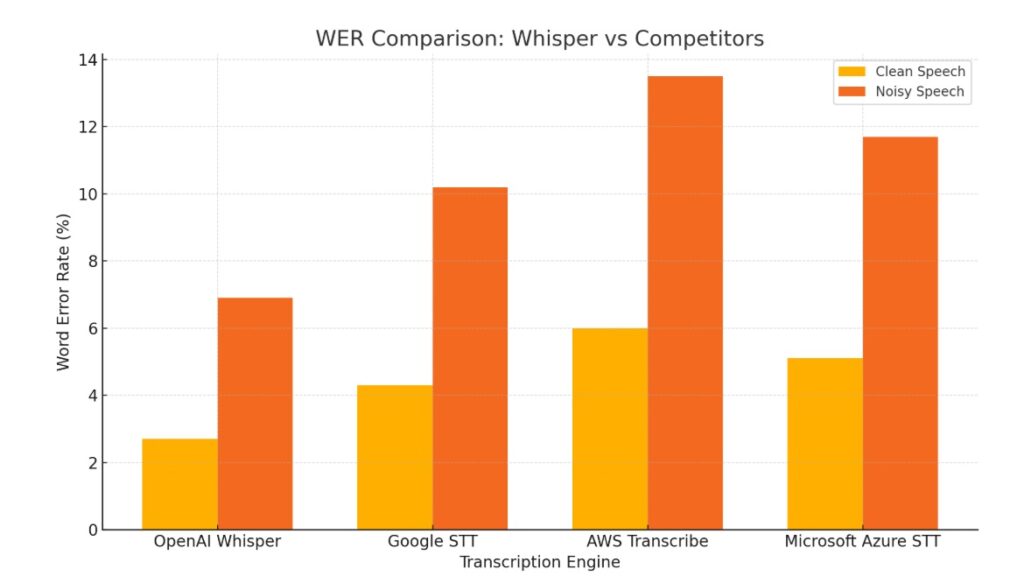

In our tests and aggregated public benchmarks using the LibriSpeech dataset, Whisper consistently outperformed proprietary services in both clean and noisy audio conditions.

| Transcription Engine | WER – Clean Speech | WER – Noisy Speech |
|---|---|---|
| OpenAI Whisper | 2.7% | 6.9% |
| Google STT | 4.3% | 10.2% |
| Microsoft Azure STT | 5.1% | 11.7% |
| AWS Transcribe | 6.0% | 13.5% |

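All four engines perform reasonably well on studio-quality audio, but Whisper's lead widens on noisy speech, the kind of real-world audio that marketers, media producers, and content creators work with every day. Its margin over the other engines is 1.6 to 3.3 percentage points of WER on clean speech and 3.3 to 6.6 points on noisy speech.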
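To put the table's figures in perspective, the short Python sketch below computes, for each engine, Whisper's absolute lead in percentage points and the corresponding relative WER reduction, using only the numbers from the table. By this arithmetic the absolute gap roughly doubles on noisy speech (for example, 1.6 vs. 3.3 points against Google STT), while the relative reduction stays in a similar range and is slightly lower on noisy audio (about 37% vs. 32% against Google STT).

```python
# WER figures (%) copied from the benchmark table: (clean, noisy).
WER = {
    "OpenAI Whisper": (2.7, 6.9),
    "Google STT": (4.3, 10.2),
    "Microsoft Azure STT": (5.1, 11.7),
    "AWS Transcribe": (6.0, 13.5),
}


def compare(baseline: float, whisper: float) -> tuple[float, float]:
    """Return (absolute gap in percentage points, relative WER reduction in %)."""
    gap = baseline - whisper
    return round(gap, 1), round(100 * gap / baseline, 1)


if __name__ == "__main__":
    whisper_clean, whisper_noisy = WER["OpenAI Whisper"]
    for engine, (clean, noisy) in WER.items():
        if engine == "OpenAI Whisper":
            continue
        c_gap, c_rel = compare(clean, whisper_clean)
        n_gap, n_rel = compare(noisy, whisper_noisy)
        print(f"{engine:20} clean: {c_gap} pts ({c_rel}%)  noisy: {n_gap} pts ({n_rel}%)")
```

Reporting both views avoids overstating the result: absolute points matter for how many words a viewer sees wrong, while relative reduction shows how much of each competitor's error Whisper removes.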

Why Whisper Performs Better

1. Massive Training Data. Whisper was trained on 680,000 hours of multilingual and multitask weakly supervised data collected from the web. This vast dataset helps it handle diverse accents, dialects, and speaking styles.

2. Multilingual by Design. Most ASR systems are English-first. Whisper supports 99+ languages natively and can even detect the spoken language automatically—a huge advantage for global transcription and subtitle translation projects.
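As an illustration of automatic language detection, the sketch below follows the usage pattern shown in the open-source `openai-whisper` package README (`load_model`, `load_audio`, `pad_or_trim`, `log_mel_spectrogram`, `detect_language`). It assumes the package and `ffmpeg` are installed; the `base` model size and the helper names `most_likely_language` and `detect_spoken_language` are our own illustrative choices, not part of any GTS pipeline. Detection looks only at the first 30 seconds of audio.

```python
def most_likely_language(probs: dict[str, float]) -> tuple[str, float]:
    """Pick the language code with the highest probability from Whisper's output."""
    code = max(probs, key=probs.get)
    return code, probs[code]


def detect_spoken_language(path: str, model_name: str = "base") -> tuple[str, float]:
    """Detect the spoken language of an audio/video file (assumes openai-whisper + ffmpeg)."""
    import whisper  # imported lazily so the helper above works without the package

    model = whisper.load_model(model_name)
    audio = whisper.load_audio(path)    # decodes via ffmpeg to 16 kHz mono
    audio = whisper.pad_or_trim(audio)  # Whisper works on fixed 30-second windows
    mel = whisper.log_mel_spectrogram(audio, n_mels=model.dims.n_mels).to(model.device)
    _, probs = model.detect_language(mel)  # maps language codes to probabilities
    return most_likely_language(probs)
```

Calling `detect_spoken_language("webinar.mp4")` (a hypothetical file) returns a language code such as `"de"` together with its probability, which can then route the file to the right transcription and translation settings.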

3. Robust in Noisy Environments. Whisper performs well even on audio with background noise, reverberation, or cross-talk, thanks to its exposure to uncurated, real-world data during training. It's well suited to video content recorded on location or over Zoom.

4. Open-Source and Extensible. Unlike closed APIs, Whisper is open source and customizable. This makes it perfect for companies like GTS that want to build end-to-end transcription and translation tools without vendor lock-in or unpredictable API pricing.

Use Case: Subtitle Translation at Scale

At GTS, we’ve integrated Whisper into our AI-powered subtitle translation platform, combining it with GPT-4o for multilingual translation and custom-built software to output SRT/VTT files.

This platform allows businesses to:

- Upload MP4 videos

- Automatically generate transcriptions

- Translate subtitles into 100+ languages

- Download ready-to-use subtitle files in minutes
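Subtitle output is the most mechanical step of such a pipeline. The sketch below is not GTS's code; it assumes segments shaped like the `segments` list returned by `openai-whisper`'s `transcribe()` (dicts with `start` and `end` in seconds plus `text`) and renders them as SRT or WebVTT cues. In a translation workflow, each segment's `text` could be swapped for its translation while the timings are kept.

```python
def format_timestamp(seconds: float, decimal_marker: str) -> str:
    """Format seconds as HH:MM:SS,mmm (SRT) or HH:MM:SS.mmm (WebVTT)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}{decimal_marker}{ms:03d}"


def to_srt(segments: list[dict]) -> str:
    """Numbered SRT cues separated by blank lines; SRT uses ',' before milliseconds."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"], ",")
        end = format_timestamp(seg["end"], ",")
        # Whisper segment text usually starts with a space, so strip it.
        cues.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(cues)


def to_vtt(segments: list[dict]) -> str:
    """WebVTT: 'WEBVTT' header, '.' before milliseconds, cue numbers omitted."""
    cues = ["WEBVTT\n"]
    for seg in segments:
        start = format_timestamp(seg["start"], ".")
        end = format_timestamp(seg["end"], ".")
        cues.append(f"{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(cues)
```

With real audio, `segments` would come from `whisper.load_model("small").transcribe("video.mp4")["segments"]`; the model size and file name there are placeholders.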

With Whisper at its core, we’re achieving higher transcription accuracy—especially in challenging cases like medical webinars, customer testimonials, and field-recorded marketing content.

Trade-Offs: Is Whisper Always the Best Fit?

Whisper’s strengths come with a few considerations:

- Latency: Unlike the streaming APIs offered by Google and AWS, Whisper is not optimized for real-time use; it processes audio in 30-second windows and is better suited to batch processing.

- Compute Requirements: Running large models locally or in the cloud may require GPU resources.

- No built-in diarization: Unlike some enterprise APIs, Whisper doesn't label which speaker said what out of the box, though this can be added by pairing it with a separate speaker-diarization model.
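One common way to add speaker labels is to run a separate diarization model (pyannote.audio is one example) and then assign each Whisper segment to the speaker whose turns overlap it the most. The sketch below shows only that merge step on hand-written data; the `Turn` structure and `assign_speakers` function are hypothetical, and real diarization output would need to be converted into this shape first.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Turn:
    """A speaker turn from a diarization model (hypothetical shape)."""
    speaker: str
    start: float  # seconds
    end: float    # seconds


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(segments: list[dict], turns: list[Turn]) -> list[dict]:
    """Label each transcript segment with the speaker who talks most during it."""
    labeled = []
    for seg in segments:
        talk_time: dict[str, float] = defaultdict(float)
        for turn in turns:
            talk_time[turn.speaker] += overlap(seg["start"], seg["end"], turn.start, turn.end)
        best = max(talk_time, key=talk_time.get, default=None)
        # Mark the segment UNKNOWN if no diarization turn overlaps it at all.
        speaker = best if best is not None and talk_time[best] > 0 else "UNKNOWN"
        labeled.append({**seg, "speaker": speaker})
    return labeled
```

Majority overlap is a simple heuristic: it works well when segments rarely span a speaker change, and it can mislabel segments that do, which is where word-level timestamps would help.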

Still, for most media, marketing, and enterprise transcription needs, these trade-offs are outweighed by the accuracy and flexibility benefits.

Final Thoughts: The AI Shift in ASR

OpenAI Whisper represents a shift in how businesses can think about ASR. It’s no longer necessary to rely on expensive, black-box enterprise APIs when an open-source model can deliver better results—and with full ownership of your transcription pipeline.

Whether you’re localizing video content, building a multilingual podcast, or adding captions to social clips, Whisper enables professional-grade accuracy at a fraction of the traditional cost.

And when paired with high-performance translation (like GPT-4o) and delivery tools like those developed at GTS, the result is a scalable, high-quality solution for global media localization.