Have you ever phoned your bank or credit card company? Have you ever called the product support department at a huge tech company like Google or Microsoft? If you have, there is a good chance that your call was answered by a call center employee in a non-English-speaking country like India or the Philippines. How can you tell? The easiest way is to ask the person where they are located. They will usually answer truthfully. Another way to know without even asking is to listen to the person’s accent. If you hear a foreign accent, chances are that the person is located in a faraway land. The location of the call center representative would not usually be an issue if the person on the other end spoke intelligibly. But as most of us know, understanding some call center personnel can be challenging.

If you find a call difficult to understand, one solution is to ask the person at the other end to transfer you to a native English speaker. The representative will usually not be insulted and will transfer the call. The problems with this method are that (a) you may waste a lot of time waiting and (b) a native English-speaking call center rep may not be available outside of standard business hours.

But there may also be a new solution on the horizon. A new startup named Sanas is working on technology that converts accents in real time. The concept is simple: take the speech of a call center representative with a heavy accent and turn it into speech with a US or British English accent. It sounds like a great idea, and Sanas has already raised a decent amount of seed money to fund development of its concept.

Cool, but will it work?

Well, there may be a few obstacles in their path. First of all, anyone who has spoken to call center staff can tell you that the accent is only part of the problem. Many call center representatives speak broken English or use poorly constructed sentences. How can an accent-matching system deal with that? Another issue is the quality of the speech output. Computer-rendered speech tends to sound like something that comes out of a computer (duh!), which means that it does not sound like natural human speech. So while accent matching may improve the customer experience, it may not improve it that much.

How new is the concept?

A simple Google search reveals that Amazon filed a patent for a real-time accent translator in 2018. How much of Sanas’s $5.5 million in seed money will be needed to defend against an IP infringement suit by Amazon? Amazon has some pretty deep pockets. There are probably other relevant patents as well, since other tech companies have been working for years on real-time speech-to-speech translators with voice rendering.

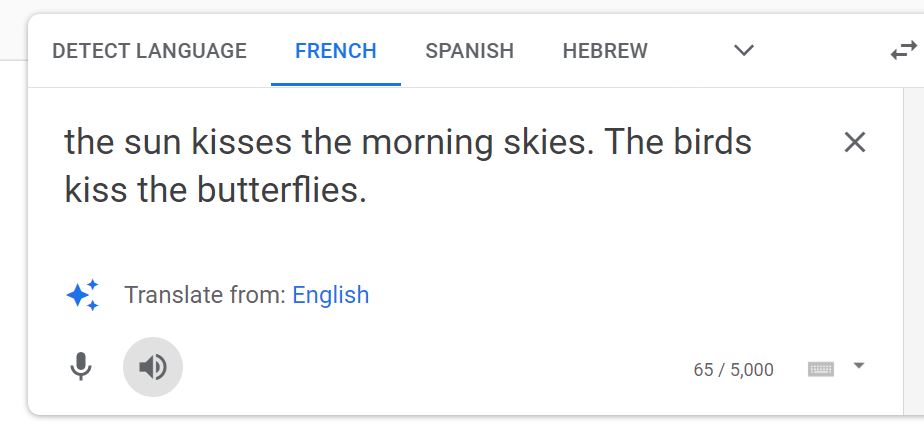

Google Translate also seems to have accent matching built in. Just go to Google Translate and paste in some English text. Select a language and click the text-to-speech button. You will hear the text spoken with the accent of the language you selected.

Bottom Line

This is a great idea, and it would be great if Sanas succeeds. But it appears that they have their work cut out for them.

I like this idea from Sanas because it is really smart, and it seems to me that it will bear fruit if it is implemented expertly. Of course, they may face many obstacles and even fail, but that risk exists for any startup. The developers need to pay attention to the subtleties and take all the nuances into account in order to complete the project successfully. I think it is achievable, and it will benefit many people.